Instrument Range vs Span: What Is the Difference?

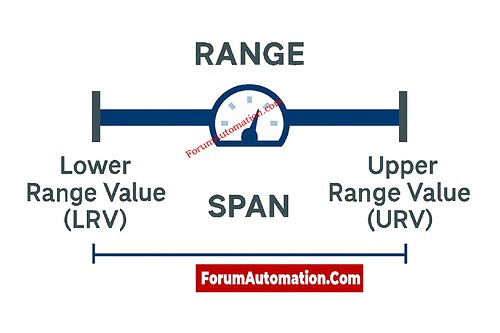

In instrumentation, “range” and “span” are two terms that technicians and engineers use every day, yet they are often confused. Although closely linked, they describe two distinct properties of a measuring instrument.

Instrument Range

The range specifies the minimum and maximum values that an instrument is intended to measure. It is expressed as a lower limit and an upper limit.

For example, if a pressure transmitter is rated from 0 to 10 bar, this is its measuring range. It indicates the limits within which the instrument can sense accurately and produce a consistent output. Different applications call for different ranges, chosen from the expected operating conditions so that the device does not saturate or become overloaded.

Instrument Span

The span is the difference between the upper and lower range values. It defines the full measurable width of the instrument’s range. Applying the same example:

- Range: 0 to 10 bar
- Span = Upper Range Value - Lower Range Value = 10 - 0 = 10 bar

The lower range value does not have to be zero. If the instrument is ranged 3 to 15 psi for a pneumatic signal, the span is 15 - 3 = 12 psi.
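The span arithmetic above can be sketched in a few lines of Python. This is only an illustration: the function name `span` and its parameter names `lrv` and `urv` are assumptions chosen for this example, not part of any instrument vendor's software.

```python
def span(lrv, urv):
    """Return the span: upper range value minus lower range value.

    lrv and urv are hypothetical parameter names for the lower and
    upper range values, given in the same engineering unit.
    """
    return urv - lrv


# The two examples from the text.
print(span(0, 10))   # 0 to 10 bar  -> 10
print(span(3, 15))   # 3 to 15 psi  -> 12
```

Note that the second range starts at 3, so the span (12) is not the same number as the upper range value (15).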

Key Difference

- Range = the actual boundaries of measurement
- Span = the numerical difference between those boundaries

Understanding the distinction is critical for calibration, scaling, and configuration. When you set a transmitter’s lower range value (LRV) and upper range value (URV), you define the range. The span is not set separately: it follows directly from those two points as URV minus LRV.
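To show how range and span enter a scaling calculation, here is a minimal Python sketch of linear scaling. It assumes a perfectly linear instrument, and the function names `percent_of_span` and `scale` are hypothetical helpers written for this example. Pairing the 0 to 10 bar input with the 3 to 15 psi output is also an illustrative assumption that simply reuses the two ranges mentioned earlier.

```python
def percent_of_span(value, lrv, urv):
    """Express a measured value as a percentage of the configured span."""
    span = urv - lrv
    if span == 0:
        raise ValueError("LRV and URV must differ")
    return (value - lrv) / span * 100.0


def scale(value, in_lrv, in_urv, out_lrv, out_urv):
    """Linearly map a value from an input range onto an output range."""
    in_span = in_urv - in_lrv
    if in_span == 0:
        raise ValueError("input LRV and URV must differ")
    fraction = (value - in_lrv) / in_span      # position within the input span
    return out_lrv + fraction * (out_urv - out_lrv)


# 5 bar on a 0 to 10 bar range is halfway through the span.
print(percent_of_span(5, 0, 10))   # 50.0

# The same reading mapped onto a 3 to 15 psi signal.
print(scale(5, 0, 10, 3, 15))      # 9.0
```

Changing either the LRV or the URV changes the span, and with it every scaled value. For instance, re-ranging the input to 2 to 10 bar gives a span of 8 bar, so the same 5 bar reading is then 37.5 % of span.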

Using these terms accurately reduces confusion when describing instruments, performing calibrations, or troubleshooting loop readings.